How Terrifying the Dream Is: A Word Embedding Approach

This project uses word embedding technology to quantitatively analyze and measure the emotional tone of dream descriptions, revealing the underlying sentiments of our subconscious narratives.

Word Embedding

Word embedding is a technique in natural language processing (NLP) and machine learning where words or phrases are represented as vectors of real numbers. These vectors capture semantic and syntactic relationships between words, allowing machines to process and understand language more effectively.

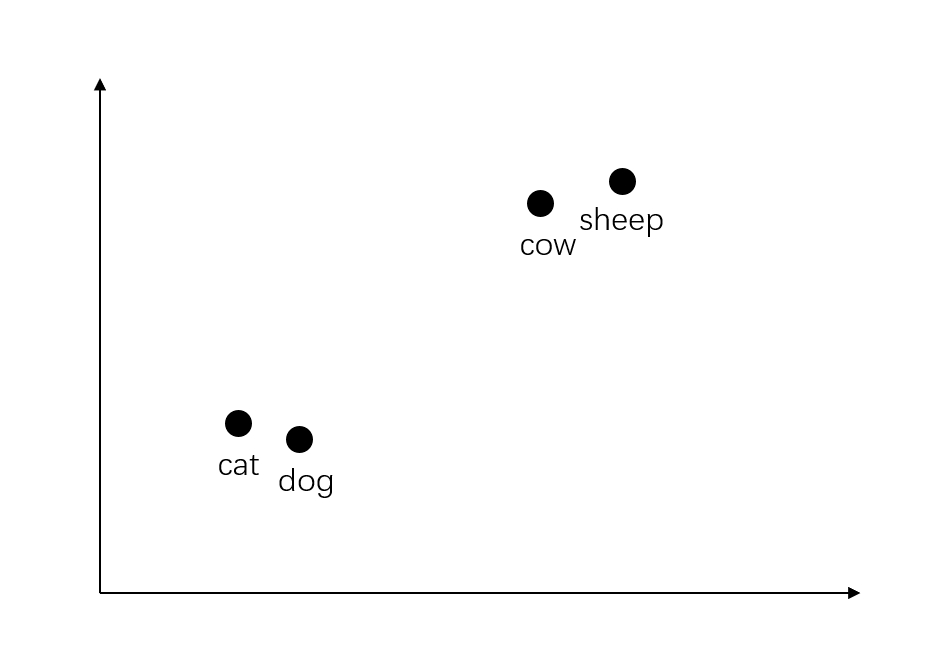

Suppose our text has a vocabulary of just four words: cat, dog, cow, and sheep. Each position in the vector then corresponds to one of these words.
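One way to read this layout is as a one-hot encoding, where a word's vector has a 1 in its own position and 0 everywhere else. The snippet below is a minimal sketch of that reading; the vocabulary order and the `one_hot` helper are assumptions made for illustration. Trained embeddings such as Word2vec are dense vectors rather than one-hot vectors, so this only shows the starting idea of mapping words to positions.

```python
# Toy vocabulary from the four-word example; the ordering is an arbitrary assumption.
vocabulary = ["cat", "dog", "cow", "sheep"]


def one_hot(word, vocab):
    """Return a vector with 1 at the word's position in vocab and 0 elsewhere."""
    if word not in vocab:
        raise KeyError(f"{word!r} is not in the vocabulary")
    return [1 if entry == word else 0 for entry in vocab]


# Build the vector for every word in the vocabulary.
one_hot_vectors = {word: one_hot(word, vocabulary) for word in vocabulary}

for word, vector in one_hot_vectors.items():
    print(word, vector)
```

With one-hot vectors every pair of distinct words is equally dissimilar, which is one reason learned, dense embeddings are preferred for measuring meaning.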

A standard way to measure the similarity between two embedding vectors is to compute their cosine similarity:

\[\text{cosine similarity}(\vec{A}, \vec{B}) = \frac{\vec{A} \cdot \vec{B}}{\|\vec{A}\| \|\vec{B}\|}\]

where:

- \(\vec{A}\) and \(\vec{B}\) are the embedding vectors.

- \(\vec{A} \cdot \vec{B}\) is the dot product of vectors \(\vec{A}\) and \(\vec{B}\).

- \(\|\vec{A}\|\) and \(\|\vec{B}\|\) are the magnitudes (or norms) of vectors \(\vec{A}\) and \(\vec{B}\), respectively.
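The formula translates directly into a few lines of Python. The sketch below uses only the standard library; the three-dimensional vectors for "nightmare", "monster", and "picnic" are made-up values for illustration, not output from a trained model.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity: dot product divided by the product of the norms."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical toy vectors (assumed values, not trained embeddings).
nightmare = [0.9, 0.1, 0.3]
monster = [0.8, 0.2, 0.4]
picnic = [-0.5, 0.9, 0.1]

print(cosine_similarity(nightmare, monster))  # close to 1: similar direction
print(cosine_similarity(nightmare, picnic))   # negative: roughly opposite direction
```

The result always lies between -1 and 1 and depends only on the direction of the vectors, not on their length.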

How Terrifying the Dream Is: A Word Embedding Approach

Using a rich textual dataset of over 30,000 dream descriptions from Kaggle, we train word embedding models. This diverse dataset covers a wide spectrum of dream narratives, ranging from mundane everyday occurrences to surreal fantasies, and from spine-chilling nightmares to blissful reveries. Such variety enables our models to capture the nuances and multifaceted nature of dreams. The trained models can not only identify semantically similar words within dream texts but also help characterize the dreams themselves. For instance: Is it a pleasant dream or a nightmare? To what extent is it a nightmare?

Modelling approach

To analyze textual descriptions of dreams, we can employ word embedding models. Algorithms such as Word2vec or GloVe transform the words in the dream texts into vectors in a high-dimensional space. These word vectors capture semantic and syntactic information about the words, laying the groundwork for subsequent analysis.

Previous studies have used word embeddings to measure, among other things, the degree of stigmatization of diseases (Best & Arseniev-Koehler, 2023), the level of novelty in texts (Zhou, 2022), and the morality of political rhetoric (Kraft & Klemmensen, 2024).

Following their approaches, once the model is trained, we can conduct a series of exploratory analyses of the dream descriptions. For instance, we can calculate the cosine similarity between word vectors to explore how common concepts in dreams are interrelated. Furthermore, by averaging the word vectors of a dream description, we can evaluate the emotional tendencies and themes of the entire narrative.
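The averaging idea can be sketched as follows: represent a dream by the mean of its word vectors, then compare that mean to the vector for "terrifying" using cosine similarity. This is a minimal illustration under stated assumptions, not the project's actual pipeline. The `toy_embeddings` values are invented, the whitespace tokenizer is deliberately naive, and `mean_vector` and `terror_score` are hypothetical helper names; in practice the vectors would come from the trained model.

```python
import math

# Hypothetical 3-dimensional embeddings (assumed values, not trained vectors).
toy_embeddings = {
    "terrifying": [0.9, 0.1, 0.2],
    "monster": [0.8, 0.2, 0.3],
    "chased": [0.7, 0.0, 0.4],
    "beach": [-0.4, 0.9, 0.1],
    "sunny": [-0.5, 0.8, 0.0],
}


def cosine_similarity(a, b):
    """Dot product divided by the product of the vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def mean_vector(tokens, embeddings):
    """Average the vectors of all tokens found in the vocabulary, or None."""
    vectors = [embeddings[t] for t in tokens if t in embeddings]
    if not vectors:
        return None
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def terror_score(text, embeddings, anchor="terrifying"):
    """Cosine similarity between the averaged dream vector and the anchor word."""
    tokens = text.lower().split()  # naive tokenizer, for illustration only
    doc_vector = mean_vector(tokens, embeddings)
    if doc_vector is None:
        return None  # no known words, so no score
    return cosine_similarity(doc_vector, embeddings[anchor])


nightmare_score = terror_score("a monster chased me", toy_embeddings)
pleasant_score = terror_score("a sunny beach", toy_embeddings)
print(nightmare_score, pleasant_score)
```

Words missing from the vocabulary are skipped, so a dream made up entirely of unknown words gets no score rather than a misleading zero.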

Training our Own Embedding

Using word embedding techniques, we have developed a preliminary method for quantifying how terrifying a dream narrative is. However, it is important to acknowledge that the code above serves merely as a proof of concept and has several limitations that warrant further refinement.

Firstly, the constrained scope of our training corpus may impede the model’s ability to discern nuanced semantic variations or potentially introduce unintended biases. This limitation could be addressed by expanding and diversifying the training dataset to encompass a broader range of dream descriptions and emotional contexts.

Secondly, relying on a single word, “terrifying”, as the sole measure of terror oversimplifies a complex psychological phenomenon. Dreams often evoke a spectrum of emotions and sensations that a one-dimensional measure may not adequately capture.
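One simple way to reduce the dependence on a single word is to average the vectors of several related seed words and score against that centroid instead. The sketch below illustrates the idea; the seed list, the `centroid` helper, and the embedding values are all hypothetical choices made for this example and would need to be validated on real data.

```python
import math

# Hypothetical 3-dimensional embeddings (assumed values, not trained vectors).
toy_embeddings = {
    "terrifying": [0.9, 0.1, 0.2],
    "scary": [0.8, 0.0, 0.3],
    "horrifying": [0.85, 0.15, 0.1],
    "falling": [0.6, 0.1, 0.5],
    "garden": [-0.3, 0.9, 0.2],
}


def cosine_similarity(a, b):
    """Dot product divided by the product of the vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def centroid(words, embeddings):
    """Average the vectors of the seed words that exist in the vocabulary."""
    vectors = [embeddings[w] for w in words if w in embeddings]
    if not vectors:
        raise ValueError("none of the seed words are in the vocabulary")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


# Assumed seed words for a broader "fear" anchor.
fear_seeds = ["terrifying", "scary", "horrifying"]
fear_anchor = centroid(fear_seeds, toy_embeddings)

falling_score = cosine_similarity(toy_embeddings["falling"], fear_anchor)
garden_score = cosine_similarity(toy_embeddings["garden"], fear_anchor)
print(falling_score, garden_score)
```

A centroid is less sensitive to the quirks of any one word's vector, although it still measures a single dimension of emotion.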

To enhance the robustness and validity of our model, future iterations could rely on pre-trained word embeddings such as GloVe, or incorporate pre-trained large language models like BERT, GPT, or Gemma through transfer learning and fine-tuning, which could significantly improve the model’s language understanding capabilities. Introducing attention mechanisms or adopting Transformer-based architectures would allow the model to better capture complex contextual relationships. Expanding and augmenting the training dataset, combined with advanced regularization methods and curriculum learning strategies, could further improve the model’s ability to generalize. Finally, incorporating domain-specific knowledge and targeted fine-tuning would enable the model to better adapt to specific task requirements. Applied together, these measures would substantially improve the model’s performance on complex language understanding tasks, making it more reliable and efficient.

Furthermore, the incorporation of advanced natural language processing techniques, such as sentiment analysis and emotion detection algorithms, could provide a more nuanced understanding of the emotional landscape within dream narratives. This multifaceted approach would not only improve the accuracy of terror quantification but also offer insights into the broader emotional and cognitive aspects of dream content.